This post documents the steps required to create a decent microservices development workflow using Docker and Docker Compose for a Node.js Express application. The goal is to make the development workflow as seamless as possible.

Objectives

- Spin up a fully working version of our Node and MongoDB microservice just by running docker-compose up

- The Docker workflow should mimic what we are used to in a Node.js workflow with nodemon, webpack and webpack-dev-server: every change is reflected in the app instantly, without restarting the Docker containers

- Use data containers for the MongoDB data

Set up a simple Express application and create a Dockerfile and docker-compose.yml file

Create a super simple Node Express application that listens on port 3000. The goal is to dockerize this application and build a development workflow around Docker containers.

> mkdir docker-node-mongo
> cd docker-node-mongo
> npm init
> npm install --save express
> npm install --save nodemon

# create an app.js file with the following contents

var express = require('express');
var app = express();

app.get('/', function(req, res){
  res.send("Hello World");
});

app.listen(3000, function(){
  console.log('Example app listening on port 3000!');
});

# add the following to the scripts section of package.json

"scripts": {
  "start": "nodemon app.js"
},

> npm start
Example app listening on port 3000!

# test
> curl -i http://localhost:3000/

At this point you should have a locally running Node Express application that responds with “Hello World” to HTTP GET requests.
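If you would rather script that check than eyeball curl output, here is a minimal sketch using only Node's built-in http module, so no extra packages are needed. The file name smoke-test.js and the helper names httpGet and checkHelloWorld are hypothetical; the sketch assumes the app is listening on localhost:3000 and should reply with exactly "Hello World".

```javascript
const http = require('http');

// Hypothetical helper: GET a URL and resolve with the status code and body
// (roughly what `curl -i` shows, minus the headers).
function httpGet(url) {
  return new Promise((resolve, reject) => {
    http.get(url, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve({ status: res.statusCode, body }));
    }).on('error', reject);
  });
}

// Resolve with true if GET / answers 200 "Hello World"; reject otherwise.
async function checkHelloWorld(baseUrl = 'http://localhost:3000') {
  const { status, body } = await httpGet(baseUrl + '/');
  if (status !== 200 || body !== 'Hello World') {
    throw new Error(`unexpected response: ${status} ${JSON.stringify(body)}`);
  }
  return true;
}

module.exports = { httpGet, checkHelloWorld };
```

For example: node -e "require('./smoke-test').checkHelloWorld().then(() => console.log('ok'))". The same check works against the containerized app later, since docker run -p 3000:3000 publishes the same port on the host.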

Dockerizing the node express application

It is actually super simple to dockerize this application and run it in a docker container.

# create a file named Dockerfile

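FROM node:argon

RUN mkdir /app
WORKDIR /app

# copy package.json on its own first, so the npm install layer
# is cached until package.json changes
COPY package.json /app
RUN npm install

# then copy the rest of the source
COPY . /app

EXPOSE 3000

CMD ["npm", "start"]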

To test this in a Docker container, we need to build the image and then run it.

> docker build -t node-test:0.1 .

The build step builds the image from the official node:argon Node image, installs the dependencies and copies the source into the image. This step only builds the image; it does not run it. Note: the . at the end of the command is the build context, i.e. the current directory where the Dockerfile is located.

> docker run -p 3000:3000 -ti node-test:0.1
Example app listening on port 3000!

# test
> curl -i http://localhost:3000/
Hello World

Using Docker Compose to build and run the container and mount the host app directory into it

This step uses Docker Compose to orchestrate our containers. It is a super cool tool that lets us start all our dependencies with a single command: “docker-compose up”.

# create a docker-compose.yml file

version: "2"
services:
  web:
    build: .
    volumes:
      - ./:/app
    ports:
      - "3000:3000"

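This file builds the image if it is not already present, mounts the host directory into the container at /app, and starts the container. The end result is that a single command initializes and runs our containers. Mounting the host directory also keeps the development workflow the same as running locally: nodemon inside the container sees every change you save on the host and restarts the app. But the biggest benefit is that anyone can clone the GitHub repository and run docker-compose up to get a clean development environment. Pretty neat! One caveat: the mount hides the node_modules directory that was installed in the image, so the container uses the host's node_modules, which means a fresh clone needs an npm install on the host before docker-compose up.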

> docker-compose up
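Why do edits on the host show up without restarting the container? The bind mount makes the host's files visible inside the container, and nodemon (started by npm start) watches them and restarts node when they change. Below is a deliberately simplified sketch of that watch-and-restart idea using Node's fs.watch and child_process.fork. It is an illustration only, not nodemon's actual implementation, and the names debounce and watchAndRestart are hypothetical.

```javascript
const fs = require('fs');
const { fork } = require('child_process');

// Collapse a burst of calls (one save can emit several change events)
// into a single call made delayMs after the last one.
function debounce(fn, delayMs) {
  let timer = null;
  return (...args) => {
    clearTimeout(timer);
    timer = setTimeout(() => fn(...args), delayMs);
  };
}

// Run `script` as a child process and restart it whenever an entry directly
// inside `dir` changes (fs.watch is used non-recursively for portability).
function watchAndRestart(script, dir, delayMs = 200) {
  let child = fork(script);
  let restarting = false;

  const restart = debounce(() => {
    if (restarting) return; // a restart is already in progress
    restarting = true;
    const begin = () => {
      child = fork(script); // start a fresh process that loads the new code
      restarting = false;
      console.log(`restarted ${script}`);
    };
    if (child.exitCode !== null || child.signalCode !== null) {
      begin(); // old process already exited (e.g. it crashed)
    } else {
      child.once('exit', begin); // wait so the old process releases its port
      child.kill();
    }
  }, delayMs);

  const watcher = fs.watch(dir, restart);
  // Return a stop function so callers can clean up.
  return () => { watcher.close(); child.kill(); };
}

module.exports = { debounce, watchAndRestart };
```

Calling watchAndRestart('app.js', '.') would roughly mimic nodemon app.js for top-level files. If saves on the host do not trigger restarts inside the container (file-change events do not always cross bind mounts, notably on some macOS and Windows setups), nodemon's --legacy-watch (-L) polling mode is the usual workaround.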

Add the MongoDB dependency to the Express application

Add mongoose to the app with “npm install --save mongoose” and connect the app to MongoDB.

var express = require('express');
var mongoose = require('mongoose');

var app = express();

// DB setup: "mongo" is the hostname of the mongo service in docker-compose.yml.
// No database name is given, so the driver's default database (test) is used.
mongoose.connect("mongodb://mongo:27017");

app.get('/', function(req, res){
  res.send("Hello World-changed-1");
});

app.listen(3000, function(){
  console.log('Example app listening on port 3000!');
});
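The connection string above hard-codes the hostname mongo, which only resolves inside the compose setup. One common alternative, sketched here as an assumption rather than part of the original setup, is to read the host, port and database name from environment variables and fall back to the compose defaults. MONGO_HOST, MONGO_PORT and MONGO_DB are hypothetical variable names; neither Docker Compose nor Mongoose defines them.

```javascript
// Build a MongoDB connection string from (hypothetical) environment variables,
// defaulting to the compose service name "mongo" and MongoDB's default port 27017.
function mongoUrl(env = process.env) {
  const host = env.MONGO_HOST || 'mongo';
  const port = env.MONGO_PORT || '27017';
  const db = env.MONGO_DB; // optional database name
  return `mongodb://${host}:${port}${db ? '/' + db : ''}`;
}

module.exports = { mongoUrl };
```

In app.js the call would become mongoose.connect(mongoUrl()). With no variables set it yields the same mongodb://mongo:27017 as before; running the app directly on the host against the published 27017 port would just need MONGO_HOST=localhost npm start, and inside compose the variables could be set with the service's environment key.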

Add a mongo entry to the services section of the docker-compose file. The links key links the web service to the mongo service, so inside the web container the service name mongo resolves to the mongo container, and the database can be reached as mongodb://mongo:27017.

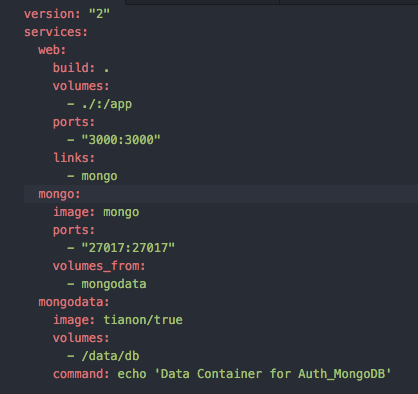

version: "2"
services:
  web:
    build: .
    volumes:
      - ./:/app
    ports:
      - "3000:3000"
    links:
      - mongo
  mongo:
    image: mongo
    ports:
      - "27017:27017"

Running docker-compose up now starts both services, web and mongo.
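One thing docker-compose up does not guarantee: links make Compose start mongo before web, but Compose does not wait until mongod is accepting connections, and by default Mongoose does not retry a failed initial connection on its own. A small retry wrapper is one way to cover that window. This is a minimal sketch; connectWithRetry and its retries/delayMs options are hypothetical names, and it assumes connectFn returns a promise or thenable, as mongoose.connect() does in recent Mongoose versions.

```javascript
// Resolve after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call connectFn until it succeeds, making at most 1 + `retries` attempts
// and waiting `delayMs` between them. Rejects with the last error.
async function connectWithRetry(connectFn, { retries = 5, delayMs = 2000 } = {}) {
  for (let attempt = 1; ; attempt += 1) {
    try {
      return await connectFn();
    } catch (err) {
      if (attempt > retries) throw err; // out of retries: give up
      console.log(`connect attempt ${attempt} failed (${err.message}); retrying in ${delayMs} ms`);
      await sleep(delayMs);
    }
  }
}

module.exports = { connectWithRetry };
```

In app.js it could wrap the existing call, e.g. connectWithRetry(() => mongoose.connect("mongodb://mongo:27017")).catch(function(err){ console.error(err); process.exit(1); }), so the app exits with an error only after mongo has had several chances to come up.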

Abstract data into data containers

The mongo data can be abstracted into a Docker data container, which makes it portable: multiple instances can share the same data container, and it also allows for easy backup and restore.
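To make the backup point concrete: Docker's documentation describes backing up a volume by starting a throwaway container with --volumes-from the container that owns the volume, bind-mounting a host directory, and writing a tar archive into it. The sketch below builds that docker run argument list in Node and runs it with child_process.execFile. It assumes the mongodata data container from the next step exists and that the busybox image is acceptable for running tar; backupArgs and backup are hypothetical helpers, and because Compose generates the actual container name, check docker ps -a for it.

```javascript
const path = require('path');
const { execFile } = require('child_process');

// Build the `docker run` arguments for a one-off container that borrows
// /data/db from `dataContainer` (via --volumes-from) and writes a tar
// archive into `hostDir` on the host (bind-mounted at /backup).
function backupArgs(dataContainer, hostDir, fileName = 'mongo-backup.tar') {
  return [
    'run', '--rm',
    '--volumes-from', dataContainer,
    '-v', `${path.resolve(hostDir)}:/backup`,
    'busybox',
    'tar', 'cvf', `/backup/${fileName}`, '/data/db',
  ];
}

// Run the backup; resolves with docker's stdout (tar's file listing).
function backup(dataContainer, hostDir) {
  return new Promise((resolve, reject) => {
    execFile('docker', backupArgs(dataContainer, hostDir), (err, stdout) => {
      if (err) reject(err);
      else resolve(stdout);
    });
  });
}

module.exports = { backupArgs, backup };
```

For example, backup('<mongodata container name>', './backups') would write ./backups/mongo-backup.tar. Restoring works the same way in reverse, extracting the archive with tar xvf in a container started with --volumes-from. Copying the files of a running mongod may not give a consistent snapshot, so stopping the mongo service first (docker-compose stop mongo) is the safer choice.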

Create a new service called mongodata and use volumes_from in the mongo service, so that the mongo database container shares the /data/db volume from mongodata.